In Amazon S3 (Simple Storage Service), duplicate objects are objects with identical content stored in one or more buckets. Duplicates can appear for various reasons, such as accidental uploads, repeated uploads of the same file, or synchronization processes.

Duplicate objects increase storage costs, because S3 bills every stored object according to its size and storage duration. It is therefore good practice to manage duplicates deliberately: avoid creating them through consistent naming conventions, and use versioning where you need to keep earlier copies of an object.

Duplicate objects in AWS S3 can cause several problems:

- Higher storage costs. Duplicate objects consume additional storage space.

- Harder data management. Keeping track of multiple copies of the same data becomes difficult, especially in environments with frequent uploads and updates.

- Performance impact. A large number of duplicate objects in a bucket can slow down operations such as listing and managing objects, resulting in slower response times and increased latency for bucket operations.

- Compliance and governance concerns. In regulated industries, duplicated data can conflict with data retention policies, data privacy regulations, and audit requirements.

To mitigate these problems, audit your S3 buckets for duplicate objects regularly. OptScale can help here: it identifies duplicate objects so that you can address them proactively.

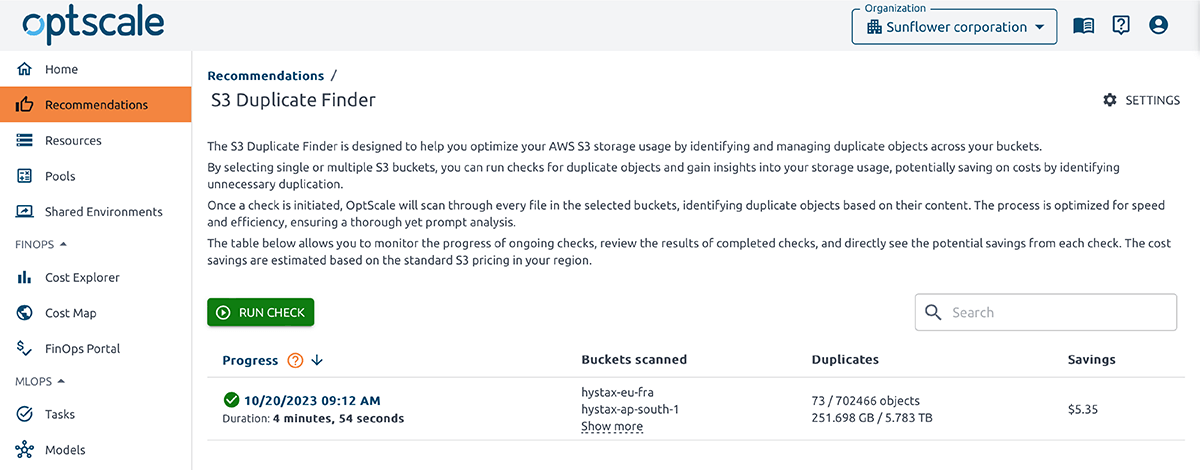

OptScale can find duplicate objects in AWS S3. Its S3 Duplicate Finder (as the feature is called in the product) helps you optimize AWS S3 storage usage by identifying and managing duplicate objects across your buckets.

You can select one or more S3 buckets, run a duplicate check on them, and gain insights into your storage usage. Identifying unnecessary duplication can help you reduce costs.

Once a check is initiated, OptScale scans the files in the selected buckets and identifies duplicate objects based on their content. The process is optimized for speed and efficiency, so the analysis is thorough yet prompt.
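The article does not describe how OptScale compares content internally, so the following is only a minimal sketch of the general idea of content-based duplicate detection. It assumes each object is described by its bucket, key, size, and ETag (S3's ListObjectsV2 returns Key, Size, and ETag for each object) and treats objects with the same ETag and size as duplicates. That assumption is a simplification: the ETag equals the MD5 of the content only for single-part uploads without SSE-KMS or SSE-C encryption, so a production tool might need to hash object content itself.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class S3Object:
    # Mirrors the per-object fields of an S3 listing (Key, Size, ETag),
    # plus the name of the bucket the listing came from.
    bucket: str
    key: str
    size: int
    etag: str


def find_duplicates(objects):
    """Group objects by (ETag, size); groups with two or more members are duplicates.

    Assumption: identical ETag and size means identical content, which holds
    for single-part, non-KMS uploads but not in general.
    """
    groups = defaultdict(list)
    for obj in objects:
        groups[(obj.etag, obj.size)].append(obj)
    return {sig: objs for sig, objs in groups.items() if len(objs) > 1}


if __name__ == "__main__":
    listing = [
        S3Object("bucket-a", "data/report.csv", 1024, '"9e1f"'),
        S3Object("bucket-b", "backup/report.csv", 1024, '"9e1f"'),
        S3Object("bucket-a", "data/other.csv", 2048, '"77ab"'),
    ]
    for (etag, size), objs in find_duplicates(listing).items():
        print(etag, size, [f"{o.bucket}/{o.key}" for o in objs])
```

Grouping by a signature in a dictionary keeps the scan to a single pass over the listing, which matters when buckets hold millions of objects.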

How to run a duplicate object check in OptScale?

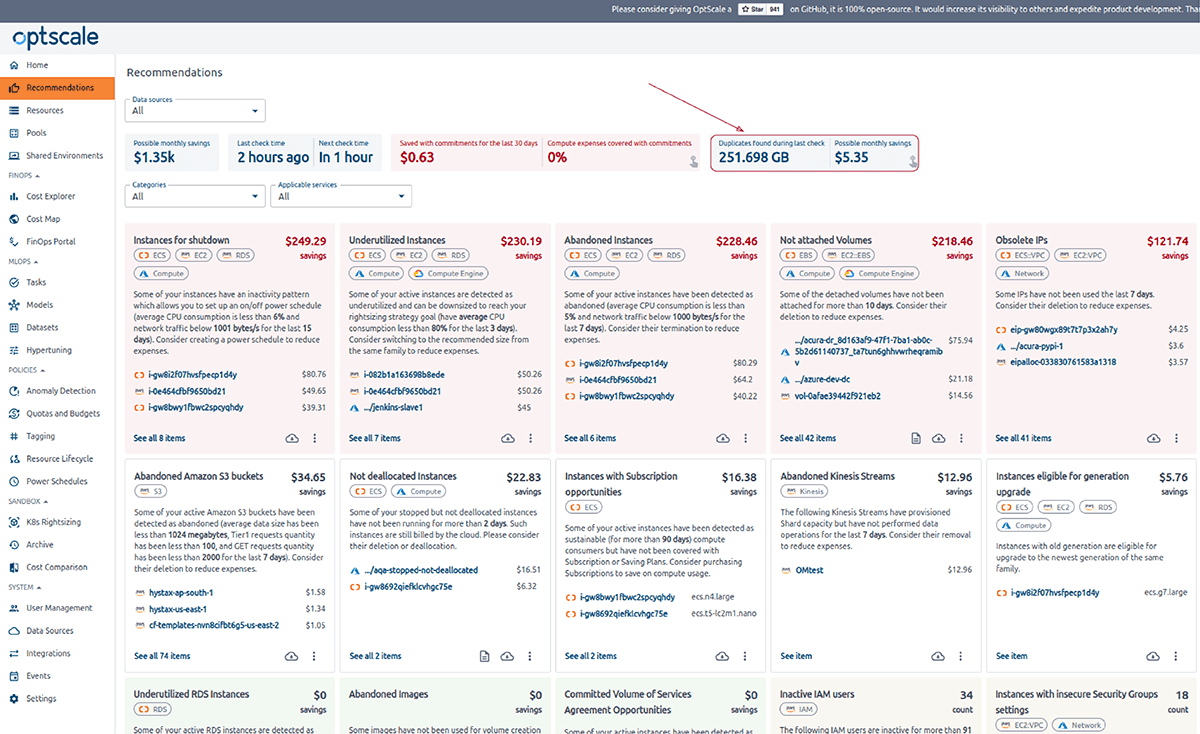

The entry point to the AWS S3 duplicate finder page is a ‘Go to S3 duplicate finder’ card on the ‘Recommendations’ page.

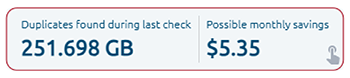

The card shows the duplicates found during the last check and the possible monthly savings.

The card can be in one of two states:

- No checks have been started, or none have finished successfully

- Information about the last successful check

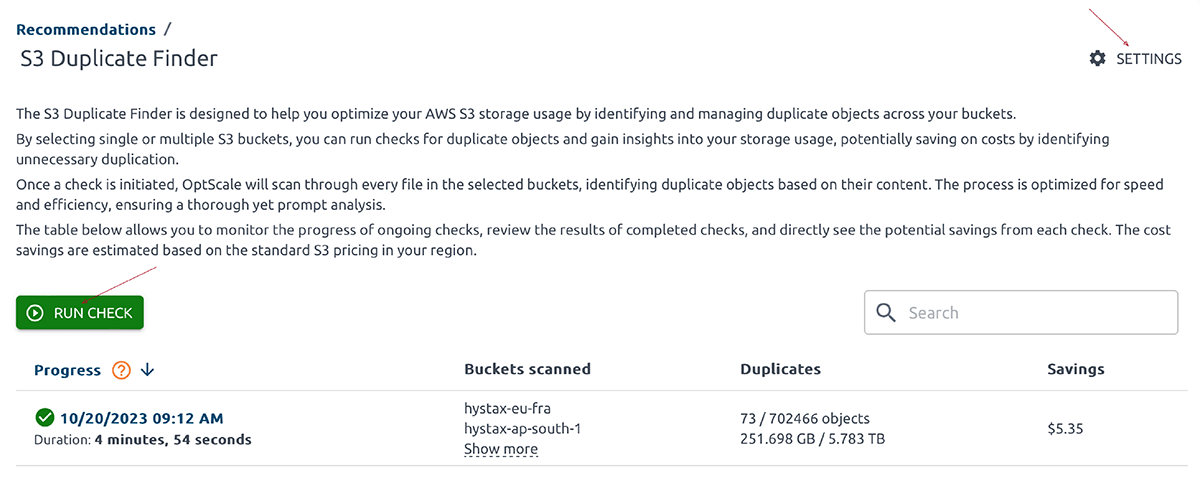

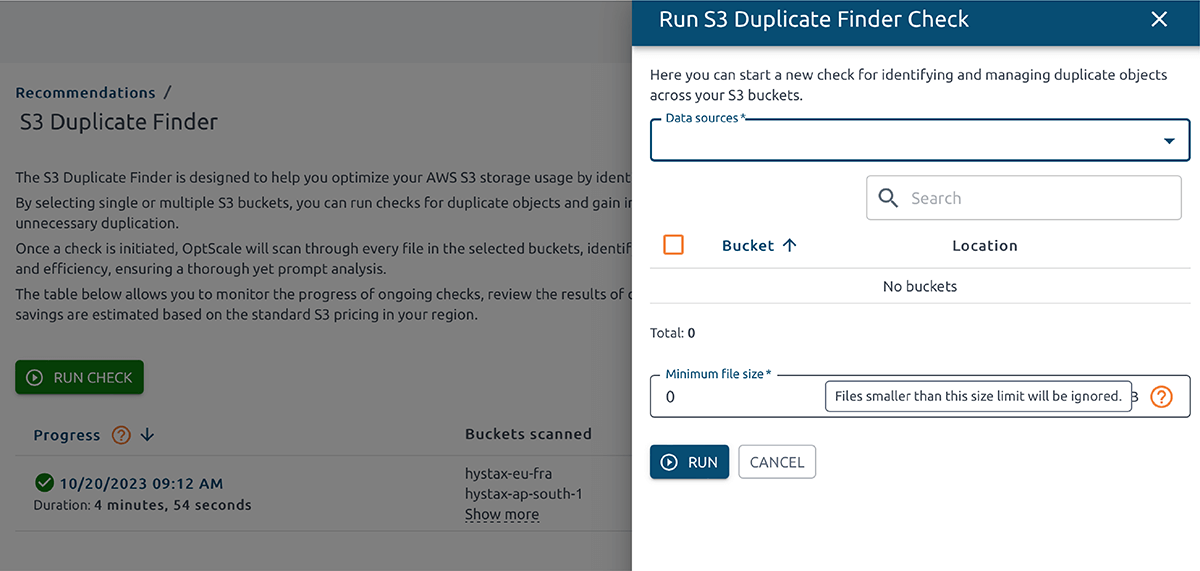

Clicking the card opens a summary page. This page shows a table of all check runs and lets you start a new check.

Table

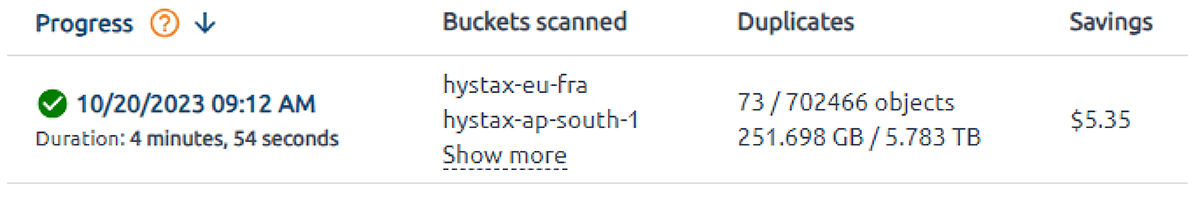

The table shows the details of each check: its start time, the list of scanned buckets with links to the corresponding resources, the total number of duplicate objects across all buckets, their size, and the overall savings.

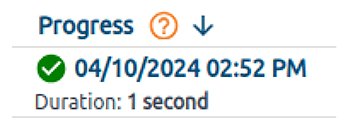

The “Progress” column shows the current status of a check, and its time label shows when the check was started. A successfully finished check has the status ‘Completed’.

A newly launched check has the status ‘Created’. Wait until it changes to ‘Completed’ to see information about duplicates.

The “Buckets Scanned” column lists all the scanned buckets. By default, only the names of the first two scanned buckets are displayed in this column. Click the “Show more” button to view the remaining names. The “Duplicates” column shows the number of duplicate objects found during a check. The “Savings” column shows the potential cost savings from removing the identified duplicates.

Actions

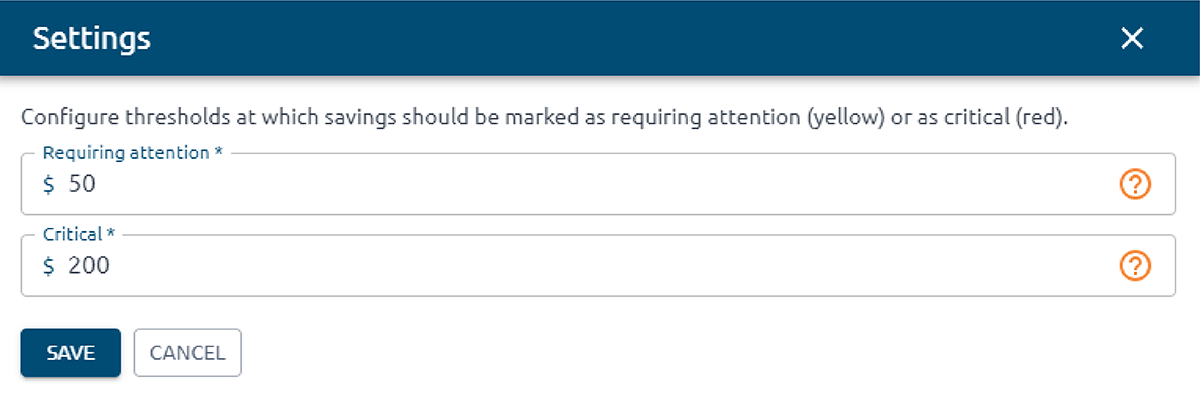

Settings

The “Settings” button opens a side modal with a form. In this form, you can configure savings threshold rules that determine how cells in the cross-bucket duplicate matrix are colored.
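The article does not document the exact format of these rules, so the following is a hypothetical sketch of how savings threshold rules could map a cell's monthly savings to a color. The threshold values, color names, and `cell_color` function are made up for illustration and are not OptScale's defaults.

```python
# Hypothetical threshold rules: (minimum monthly savings in USD, color).
# OptScale's actual rule format and default values are not described here.
DEFAULT_RULES = [(100.0, "red"), (10.0, "orange"), (0.0, "green")]


def cell_color(savings, rules=DEFAULT_RULES):
    """Return the color of the highest threshold that `savings` reaches."""
    # Check thresholds from highest to lowest so the strictest match wins.
    for threshold, color in sorted(rules, key=lambda rule: rule[0], reverse=True):
        if savings >= threshold:
            return color
    return None  # below every threshold: leave the cell uncolored


if __name__ == "__main__":
    for value in (250.0, 42.5, 3.0):
        print(value, cell_color(value))
```

Sorting the rules inside the function means the order in which a user enters them does not matter.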

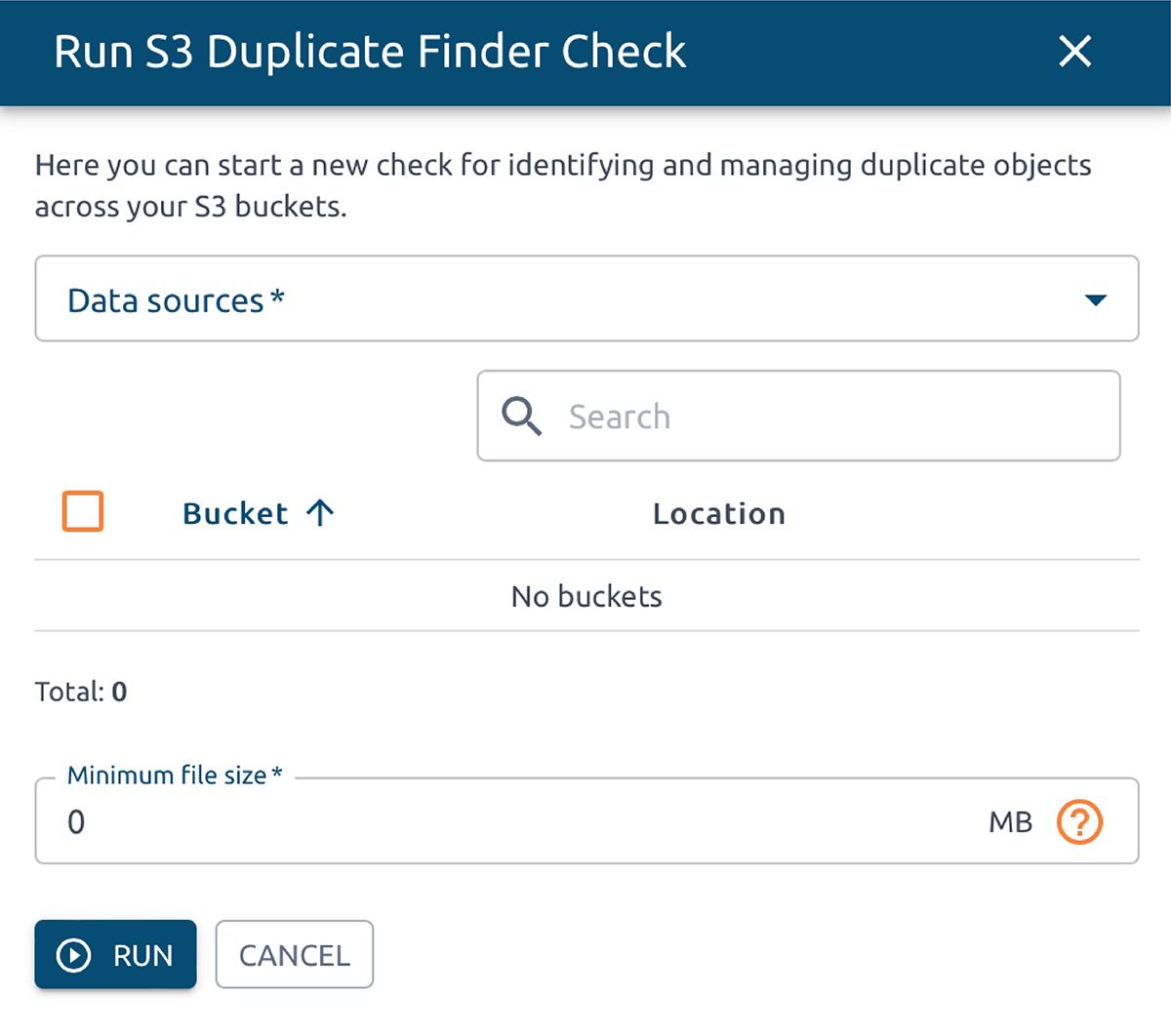

Run check

The “Run check” button opens a side modal with a form for configuring and starting a new check.


Run S3 Duplicate check

The form allows selecting the data source type, buckets for duplicate checking, and setting a minimum file size threshold.

1. The ‘Data source’ multi-select enables the selection of the desired data sources where buckets will be checked.

2. The ‘Buckets’ table enables selecting the desired buckets to be checked for duplicates. The list of buckets depends on the selected data source. The maximum number of buckets per check is 100.

3. The ‘Minimum file size’ field lets you specify the minimum file size threshold. Files smaller than this threshold are skipped during the check.
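The constraints in this form can be expressed as a small validation and filtering step. This is a hypothetical sketch, not OptScale code: `CheckRequest`, `objects_to_scan`, and their fields are illustrative names, and sizes are assumed to be in bytes. The only rules taken from the article are the limit of 100 buckets per check and that files below the minimum size are skipped.

```python
from dataclasses import dataclass

MAX_BUCKETS_PER_CHECK = 100  # limit stated for the "Buckets" table


@dataclass
class CheckRequest:
    # Hypothetical representation of the "Run S3 Duplicate check" form.
    data_sources: list
    buckets: list
    min_file_size: int = 0  # assumed unit: bytes; smaller files are skipped

    def validate(self):
        if not self.buckets:
            raise ValueError("select at least one bucket")
        if len(self.buckets) > MAX_BUCKETS_PER_CHECK:
            raise ValueError(
                f"at most {MAX_BUCKETS_PER_CHECK} buckets per check, "
                f"got {len(self.buckets)}"
            )
        if self.min_file_size < 0:
            raise ValueError("minimum file size cannot be negative")


def objects_to_scan(sizes_by_key, min_file_size):
    """Keep only the keys whose size meets the minimum file size threshold."""
    return [key for key, size in sizes_by_key.items() if size >= min_file_size]


if __name__ == "__main__":
    request = CheckRequest(["aws-prod"], ["bucket-a", "bucket-b"], min_file_size=1024)
    request.validate()
    print(objects_to_scan({"a.log": 100, "b.csv": 4096}, request.min_file_size))
```

Skipping small files shrinks the scan, and small files contribute little to storage costs anyway.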

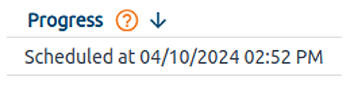

When you click ‘Run’, a note with the date and time of the running check appears.

When the check is finished, the cell content looks like this:

Click this link to see a table of the duplicates found.

Use this information to gain insights on minimizing your expenses and saving resources.

How to find duplicate objects in OptScale? S3 Duplicate Finder results overview

To view the results of a duplicate check, click the ‘Go to S3 duplicate finder’ summary card on the ‘Recommendations’ page, then select the desired check in the table. This opens an overview page with the results of that check.

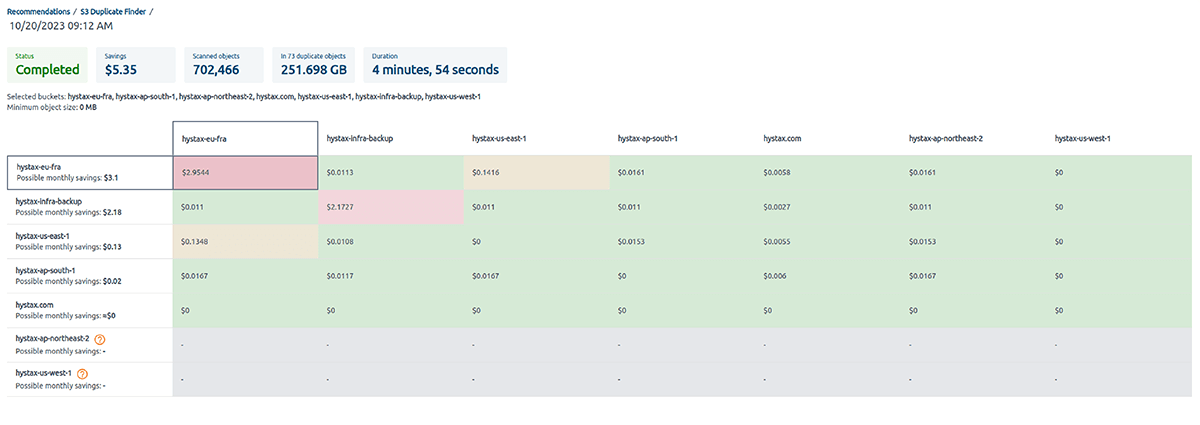

The overview page shows key information: the check’s status, overall savings, the number of scanned objects, the total number of duplicate objects across all buckets, and the duration of the check.

The cross-bucket duplicate table shows how much duplication exists between specific pairs of buckets. The ‘From’ buckets are listed in the first column, and the ‘To’ buckets are listed in the first row. Each intersection of a row and a column shows the possible savings per month. The table is sorted by possible savings per month in descending order.
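How OptScale computes each cell is not described in the article, so the sketch below rests on stated assumptions. Cell (A, B) is taken to be the monthly cost of B's copies of content that also exists in A, priced at an assumed $0.023 per GB-month (the S3 Standard list price for the first 50 TB in us-east-1; adjust it for your region and storage class). Rows are ordered by their total savings, highest first. Objects are `(bucket, size, etag)` tuples, and `cross_bucket_savings` and `sorted_rows` are illustrative names.

```python
from collections import defaultdict
from itertools import permutations

PRICE_PER_GB_MONTH = 0.023  # assumed price; not taken from OptScale
BYTES_PER_GB = 1024 ** 3


def cross_bucket_savings(objects, price=PRICE_PER_GB_MONTH):
    """Build {from_bucket: {to_bucket: monthly_savings}} from (bucket, size, etag) tuples.

    Assumed semantics: cell (A, B) is what removing B's copies of content
    that also exists in A would save per month.
    """
    # content signature -> bucket -> total bytes of that content in the bucket
    by_content = defaultdict(lambda: defaultdict(int))
    for bucket, size, etag in objects:
        by_content[(etag, size)][bucket] += size

    matrix = defaultdict(lambda: defaultdict(float))
    for buckets in by_content.values():
        # Every ordered pair of distinct buckets sharing this content.
        for src, dst in permutations(buckets, 2):
            matrix[src][dst] += buckets[dst] / BYTES_PER_GB * price
    return matrix


def sorted_rows(matrix):
    """Order 'From' buckets by their total possible monthly savings, highest first."""
    return sorted(matrix, key=lambda bucket: sum(matrix[bucket].values()), reverse=True)


if __name__ == "__main__":
    gb = BYTES_PER_GB
    listing = [
        ("bucket-a", 10 * gb, "e1"),
        ("bucket-b", 10 * gb, "e1"),
        ("bucket-c", 2 * gb, "e2"),
        ("bucket-a", 2 * gb, "e2"),
    ]
    m = cross_bucket_savings(listing)
    for src in sorted_rows(m):
        print(src, {dst: round(value, 3) for dst, value in m[src].items()})
```

Note that under these semantics the matrix is symmetric whenever each bucket holds exactly one copy of a given file.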

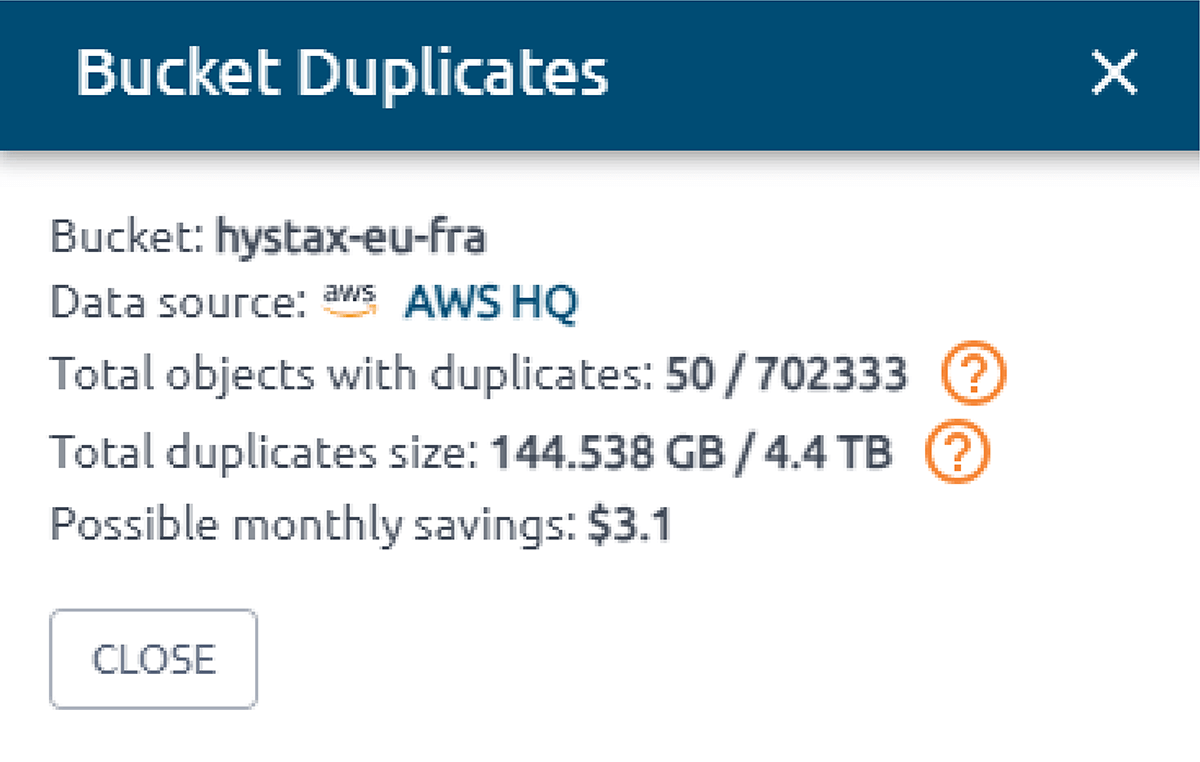

From bucket details

- Bucket name

- Possible savings per month

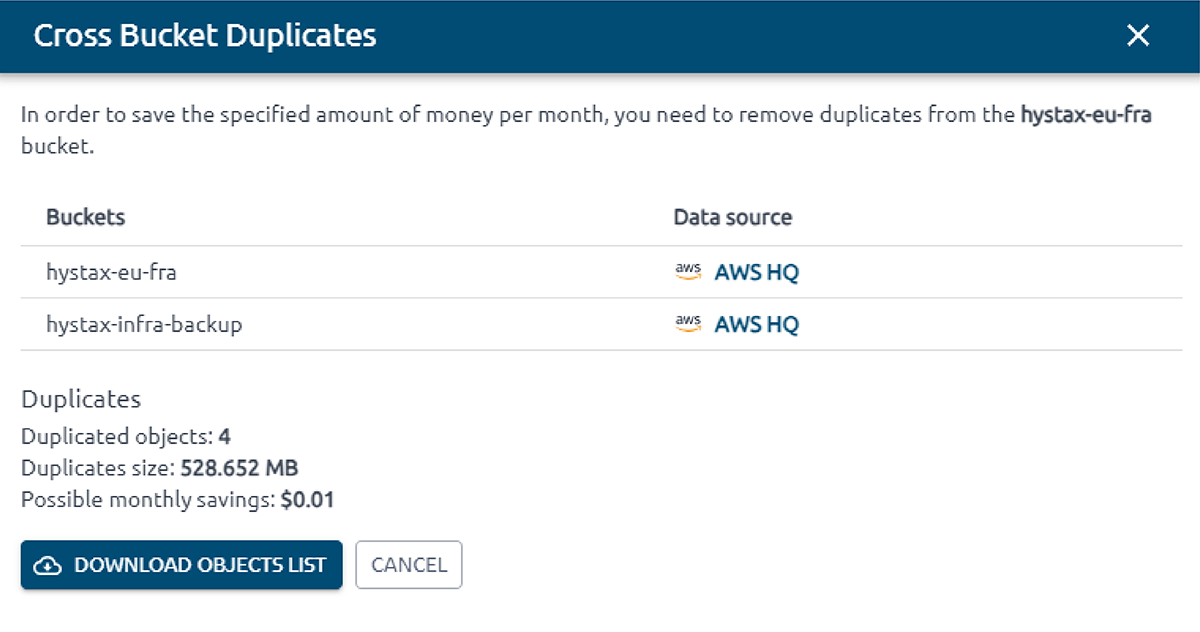

Cross-bucket details

Clicking a cell in the table body opens a side modal with detailed information about the duplicates shared by the two buckets.

The first bucket shown is the ‘From’ bucket, and the second is the ‘To’ bucket. You can download the object list for further analysis.

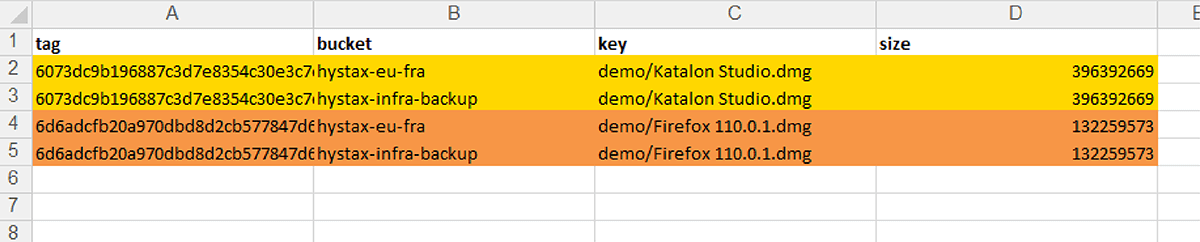

The table makes the duplicates immediately visible: it shows each duplicate object, the bucket it is stored in, its path (the Key column), and its size. The Tag column is the reference point for identifying duplicates: duplicate objects share the same Tag value. By default, the table is sorted by the Tag column, so duplicates appear next to each other as soon as you open it.

Locate each duplicate in its bucket by its key path and remove the unneeded copies to realize the possible savings.
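Before removing anything, it can help to turn the object list into an explicit plan. The sketch below is hypothetical: it assumes the downloaded list has been loaded as rows with `bucket`, `key`, `size`, and `tag` fields (the export format is not described in the article), treats rows with the same tag as duplicates, keeps the copy that sorts first by bucket and key (an arbitrary policy chosen for illustration), and marks the rest for removal. The actual deletion could then be done, for example, with the boto3 S3 client's `delete_object(Bucket=..., Key=...)`; the sketch deliberately stops at the plan.

```python
from itertools import groupby
from operator import itemgetter


def removal_plan(rows):
    """Return (to_keep, to_remove, freed_bytes) for rows sharing a tag.

    `to_keep` and `to_remove` are lists of (bucket, key) pairs. Within each
    tag group, the copy that sorts first by (bucket, key) is kept.
    """
    keep, remove, freed = [], [], 0
    # Sort by tag first so groupby sees each duplicate group as one run,
    # like the table's default sort by the Tag column.
    ordered = sorted(rows, key=itemgetter("tag", "bucket", "key"))
    for _tag, group in groupby(ordered, key=itemgetter("tag")):
        first, *rest = group
        keep.append((first["bucket"], first["key"]))
        for row in rest:
            remove.append((row["bucket"], row["key"]))
            freed += row["size"]
    return keep, remove, freed


if __name__ == "__main__":
    rows = [
        {"bucket": "bucket-b", "key": "backup/report.csv", "size": 1024, "tag": "9e1f"},
        {"bucket": "bucket-a", "key": "data/report.csv", "size": 1024, "tag": "9e1f"},
    ]
    keep, remove, freed = removal_plan(rows)
    print("keep:", keep)
    print("remove:", remove, "freeing", freed, "bytes")
```

Reviewing such a plan before deleting anything guards against removing the only copy a workload actually depends on.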

OptScale GitHub project: https://github.com/hystax/optscale

We’d appreciate it if you would give us a star.

The OptScale platform enables companies to track their cloud spending, maximize resource usage, and achieve efficiency. OptScale MLOps features significantly benefit ML/AI teams by enabling experiment profiling, hyperparameter tuning, performance enhancement, and cost optimization recommendations. OptScale ensures that your cloud and AI operations are managed cost-effectively and efficiently.